AI and pharmacy practice: how did we get here and where are we going?

Artificial Intelligence (AI) is revolutionising all areas of the healthcare industry, including the pharmacy sector. In this article, Frank Bourke, Learning Technologist at IIOP, explores AI’s history, its core components, and its adoption and application in global pharmacy. He also examines ethical concerns and the safeguards needed to ensure responsible use of (generative) AI in day-to-day pharmacy practice, and looks at AI’s promise and its direction of travel.

The integration of Artificial Intelligence (AI) into pharmacy and the broader healthcare sector represents a transformative journey, enhancing efficiency, precision, and personalised care. But how did we get here? Throughout its development, AI’s role in pharmacy has grown from foundational research to practical applications in drug discovery, personalised medicine, patient care, and operational efficiency:

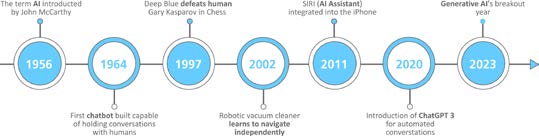

Figure 1: Significant milestones in AI’s general development

The future of AI in pharmacy promises even greater advancements, with potential impacts on global health challenges, vaccine development, and the continued evolution of personalised medicine.

This definition of AI is from AI itself: “AI refers to computer systems designed to perform tasks that typically require human intelligence. These tasks include learning, problem-solving, speech recognition, and decision-making. AI aims to simulate intelligent behaviour and improve performance over time through data analysis and machine learning algorithms”.

At its highest level, AI comprises three core components: computational power, data resources and machine learning algorithms. Computational power is its brainpower, crunching numbers and solving problems quickly. Data resources (sometimes referred to as ‘big data’) are like a massive library of information on which AI can train. And machine learning is its ability to get better with experience, like predicting what you might like, based on what it has learned previously.

Traditional AI uses its computational power, learns from a huge library, and improves itself over time to assist us in various tasks, and make things more efficient and helpful. However, it doesn’t generate entirely new content, but rather makes predictions or classifications based on the patterns it has learned. Email spam filters, for example, use non-generative AI to identify and filter out unwanted emails based on patterns learned from labelled examples. It is also commonly used in predictive text suggestions, for example on your phone or in Microsoft Word documents, predicting the next word in a sentence based on context and patterns from previous text inputs.
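To make the spam filter example concrete, here is a minimal sketch of how a non-generative classifier can learn from labelled examples. It uses naive Bayes word counting, which is one common textbook technique and not necessarily what any particular email provider uses. The six training messages and the function names `train` and `classify` are invented purely for illustration; a real filter would learn from many thousands of messages.

```python
import math
from collections import Counter

# Hypothetical labelled examples, invented for illustration only.
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("claim your free money today", "spam"),
    ("cheap pills free delivery", "spam"),
    ("meeting agenda for monday", "legitimate"),
    ("your prescription is ready for collection", "legitimate"),
    ("minutes from the pharmacy team meeting", "legitimate"),
]


def train(examples):
    """Count how often each word appears under each label."""
    word_counts = {"spam": Counter(), "legitimate": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Return the label with the higher naive Bayes log-probability."""
    vocabulary = set(word_counts["spam"]) | set(word_counts["legitimate"])
    total_examples = sum(label_counts.values())
    scores = {}
    for label in word_counts:
        # Prior: how common this label was in the training data.
        score = math.log(label_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing so an unseen word does not zero out the score.
            likelihood = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        scores[label] = score
    return max(scores, key=scores.get)


word_counts, label_counts = train(TRAINING_DATA)
print(classify("free prize today", word_counts, label_counts))
print(classify("pharmacy meeting on monday", word_counts, label_counts))
```

Note that the classifier never writes a new email. It only assigns one of two labels to the text it is given, based on word patterns in the labelled examples, which is the distinction between non-generative and generative AI.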

Generative AI, on the other hand, is more creative and can ‘generate’ entirely new content. It generates data that wasn’t in the training set, whether it’s images, text, or other types of content. For example, OpenAI’s ChatGPT is a powerful language model capable of generating coherent and contextually relevant text passages. And DALL-E, an artificial intelligence program also developed by OpenAI, can generate digital images from natural language descriptions (user prompts).

Both non-generative AI and generative AI are considered to be weak, or narrow, AI. Although Gen AI can generate original content, it is still performing single tasks, such as responding to a text prompt or responding to voice prompts. To be considered strong AI, or artificial general intelligence (AGI), a system would need to have the full range of human capabilities, such as reasoning, talking, and even emoting.

ChatGPT is an implementation of a large language model (or LLM) developed by OpenAI. GPT stands for Generative Pre-trained Transformer. Its original release was trained on a diverse and extensive dataset extending to 2021. This training data consists of a wide range of sources, including websites, articles, books, and other text available on the web. ChatGPT, or other similar language models, can be used to assist in understanding complex topics, as a writing assistant, or to create text for various purposes, including planning and generating content or ideas. Here’s a sample of the types of user prompts used in ChatGPT:

You’ll need to have an OpenAI account to use ChatGPT. Go to https://chat.openai.com and select Sign up to create an account. You can also select Continue with Google/Microsoft to create an account using your existing Microsoft or Google account. But you’ll need to select Sign up first.

Begin with simple queries to understand how ChatGPT responds. For example, ask it to explain a concept, provide a summary of a topic, or help with a simple task.

Experiment with Prompts: Try different types of prompts to see how ChatGPT can generate diverse responses. This could include creative writing, answering hypothetical questions, or providing explanations.

Getting started with ChatGPT is about exploration and learning by doing. As you become more familiar with the tool, you’ll discover innovative ways to apply it to both personal and professional tasks.

Recognise that while ChatGPT is powerful, it has limitations, such as a knowledge cutoff (April 2023 at the time of writing, though this varies by model version), and it might not always provide perfect answers, or indeed wholly correct ones. If in doubt, check its responses against other sources.

So, we’ve seen AI at work in pharmacy and in other industries, and we’ve seen some examples of using generative AI such as ChatGPT to help with text processing and idea generation. However, AI is not without its challenges. There are legitimate concerns about accuracy and inherent biases, fears around job displacement and data privacy, and other ethical concerns and phenomena such as AI hallucination, for example where ChatGPT generated a fake data set to support its scientific hypothesis.

So how can generative AI such as ChatGPT get it wrong:

Clearly, the use of generative AI applications such as ChatGPT is not a recommended or viable replacement for traditional pharmacy practice. It’s fine for some admin tasks or for helping with ideas for non-pharmacist-based tasks. Pharmacists are the professionals; generative AI is not, no matter how plausible or convincing it may seem.

Artificial intelligence systems, particularly those based on machine learning and natural language processing (NLP) technologies like ChatGPT, can generate or output information that is incorrect, fabricated, or not grounded in reality. This can occur despite the AI having no intention to deceive, as it lacks consciousness or intent. Instead, hallucinations are a byproduct of the AI’s training process and its attempt to make sense of or generate content based on the patterns it has learned from its training data.

AI hallucinations can manifest in various ways, such as:

The pace of AI development is truly astounding. We’ve gone from pocket calculators to generative AI in a few decades. Today, we’re not so far off the next great milestone (estimated to be five to eight years away), artificial general intelligence (AGI), where AI is expected to be at least as smart as humans, if not smarter. And then there’s the advent of Super Artificial Intelligence. To paraphrase Stephen Hawking, Super Artificial Intelligence is to mankind what man is to snails! Some experts even predict that ‘the singularity’, the moment when artificial intelligence surpasses the control of humans, could happen in less than a decade (by 2031). But of course, it’s not all positive.

The area of security and privacy should be very familiar to us all given our reliance on the ‘internet of things’, and the waves of online scams and phishing attempts that most of us experience weekly. Much fraudulent activity is facilitated and executed by AI bots. There are two additional security and privacy areas that are growing rapidly and have the potential to profoundly affect wider society, and indeed the reputation of AI itself. AI algorithms can be used to impersonate individuals or create synthetic identities. Identity theft through AI can lead to unauthorised access to personal information, financial fraud, and reputational damage. It is becoming more and more challenging to distinguish between genuine and synthetic content.

Artificial Intelligence can also be used to generate realistic-looking news articles, blog posts, or social media content. Advanced natural language processing models, like GPT-3, can generate coherent and contextually relevant text that might resemble legitimate news. Fake news generated by AI can be used to spread misinformation, manipulate public opinion, and influence political discourse.

Though it can sometimes seem like it, AI news is not all dark. Measures and safeguards are continually developed and updated to counteract the negative applications of AI. Organisations that develop and deploy AI systems also have ethical and legal responsibilities for the outcomes of their AI systems. Regulatory compliance must ensure that these responsibilities are met, promoting transparency and accountability. Some regulations require that AI systems provide explanations for their decisions. This transparency helps build trust and ensures that individuals affected by AI decisions can understand the reasoning behind those decisions. Many AI systems rely on large datasets, often containing sensitive personal information. Regulations such as the General Data Protection Regulation (GDPR) in Europe or the Health Insurance Portability and Accountability Act (HIPAA) in the United States mandate strict controls over the collection, storage, and processing of personal data.

Predicting the future of AI is difficult and history is littered with blind alleys that science failed to visit. As Arthur C Clarke once famously said, “the more unbelievable the prediction, the more likely it is to happen”. But it may be useful to draw a parallel between the discovery and application of electricity and that of AI. Not long after the first light bulb went on, electricity gave us, on the one hand, the exciting possibility of creating life via Mary Shelley’s novel, and on the other the sobering reality of destroying life via the electric chair. Yet, electricity has led to great advances in science and technology and in our daily lives. And so, with AI, there will be lots of positive developments as well as some negative ones, including outlandish claims and promises, and no doubt a few unintended consequences.

So, as a species, we can expect increased integration of AI in everyday life, advanced generative AI capability including personal (and customisable) assistants in education and training, next level human-AI interaction and collaboration, such as Rabbit AI interfaces and wearable AI devices, and increased speed and efficiency via quantum computing.

What might this mean for pharmacists? According to the American Society of Health-System Pharmacists (ASHP) in its 2020 Statement on the Use of Artificial Intelligence in Pharmacy:

“Pharmacists will be necessary in leading innovation on how AI models and technologies are developed, validated and activated to enact change. Further, pharmacists must be poised to capitalise on the operational gains and enhanced clinical guidance made possible by AI technology to enhance patient care. To carry this out, pharmacy needs to continue to build on education that will enable current and future generations of pharmacists and pharmacy technicians to shape the evolution of AI technology. The scope and impact of changes to come will cross all aspects of pharmacy practice, requiring continued engagement by all in the field”.

It’s certain that pharmacists, like other professionals, can look forward to an increase in AI in the work environment. As AI becomes more reliable and embedded into day-to-day practices, standard pharmacy operations will become increasingly automated, allowing pharmacists to focus less on repetitive tasks and more on high-value patient care activities. Examples include more efficient clinical trials, further advances in drug development and efficacy (both linked to genetics), the use of sentiment analysers to recognise and handle patient queries, automated patient support via dedicated pharmacy chatbots or personal assistants, automated patient monitoring and medication adherence, and more data-informed patient health and proactive interventions. AI also has the potential to transform pharmacies into health management centres, impacting value-based care.

Despite facing many challenges, AI promises a bright future for the pharmacy industry. As AI technology develops, its capabilities will continue to expand, driving innovation, and refining AI tools to meet specific pharmacy needs.

Further reading options and references are available on request — email ipureview@ipu.ie for a list from the author.

Interested in AI and pharmacy?

Make sure you check out the April 2024 IPU Review, which will include an article on ‘The potential for AI in community pharmacy’, from management of antimicrobial resistance to providing reliable health information, authored by Mark Kelly, Senior Manager in PwC’s HealthTech practice.

Frank Bourke

Learning Technologist, IIOP
